Introduction to Deep Learning provides a rigorous, concept-driven introduction to the models that power modern AI systems, from image recognition to large language models. You’ll build neural networks from first principles, understanding how forward passes, loss functions, and backpropagation enable learning. As the course progresses, you’ll train and regularize deep models, design convolutional networks for vision, model sequences with RNNs, LSTMs, and attention, and apply transformer-based architectures such as BERT, GPT, and Vision Transformers. You’ll also explore contrastive learning and CLIP, which connect vision and language. By combining mathematical foundations with practical application, this course equips you to understand, train, and use deep learning models with confidence.

Introduction to Deep Learning

This course is part of the Machine Learning: Theory and Hands-on Practice with Python Specialization

Instructor: Daniel E. Acuna

What you'll learn

Explain the mathematical foundations of neural networks and how they learn from data.

Train and regularize deep neural networks for effective generalization.

Design and apply specialized neural network architectures for images and sequences.

Apply transformer-based and multimodal models to real-world scenarios.

Skills you'll gain

- Network Model

- PyTorch (Machine Learning Library)

- Natural Language Processing

- Network Architecture

- Recurrent Neural Networks (RNNs)

- Artificial Intelligence and Machine Learning (AI/ML)

- Keras (Neural Network Library)

- Large Language Modeling

- Vision Transformer (ViT)

- Embeddings

Details to know

6 assignments

January 2026

Build your subject-matter expertise

- Learn new concepts from industry experts

- Gain a foundational understanding of a subject or tool

- Develop job-relevant skills with hands-on projects

- Earn a shareable career certificate

There are 5 modules in this course

Welcome to Introduction to Deep Learning. This module builds the mathematical foundations of neural networks. Starting from linear models, you will learn about the artificial neuron and develop the mathematics of gradient descent and backpropagation. The focus is on understanding how and why neural networks work through the underlying math—covering the forward pass, loss functions, and the chain rule to show how information flows through networks and how they learn from data.
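To make the forward pass, loss, and chain rule concrete, here is a minimal sketch of a single sigmoid neuron trained with plain gradient descent. It is not taken from the course materials: the squared-error loss, the logical OR toy dataset, the learning rate, and all function names are assumptions chosen for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(w, b, x):
    """Forward pass of one neuron: weighted sum, then sigmoid activation."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return sigmoid(z)

def mean_loss(w, b, data):
    """Mean squared-error loss, L = (y_hat - y)^2 / 2 per example."""
    return sum((forward(w, b, x) - y) ** 2 / 2 for x, y in data) / len(data)

def train_neuron(data, lr=0.5, epochs=2000):
    """Fit a single sigmoid neuron with full-batch gradient descent."""
    n_inputs = len(data[0][0])
    w, b = [0.0] * n_inputs, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_inputs, 0.0
        for x, y in data:
            y_hat = forward(w, b, x)
            # Chain rule: dL/dz = dL/dy_hat * dy_hat/dz
            #                   = (y_hat - y) * y_hat * (1 - y_hat)
            dz = (y_hat - y) * y_hat * (1.0 - y_hat)
            for i, xi in enumerate(x):
                grad_w[i] += dz * xi  # dz/dw_i = x_i
            grad_b += dz              # dz/db = 1
        n = len(data)
        # Gradient descent step on the averaged gradients.
        w = [wi - lr * gi / n for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

# Toy dataset (an assumption for illustration): the logical OR function.
OR_DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0),
           ((1.0, 0.0), 1.0), ((1.0, 1.0), 1.0)]
```

Calling `train_neuron(OR_DATA)` returns weights and a bias whose loss is lower than at the all-zero starting point. A deep network repeats the same chain-rule step layer by layer, which is what backpropagation automates.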

What's included

15 videos, 5 readings, 2 assignments, 1 programming assignment

This module focuses on training neural networks effectively. Topics include optimization algorithms, hyperparameter tuning, and regularization techniques to prevent overfitting and achieve good generalization. You will compare different optimizers like SGD, momentum, and Adam, understand how learning rate and batch size affect training dynamics, and apply weight decay, dropout, early stopping, and batch normalization.
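The optimizers named above differ only in how they turn a gradient into a parameter update. The sketch below writes out one common formulation of each update rule on a toy quadratic loss. The loss function, starting point, and hyperparameter values are assumptions for illustration, not settings from the course.

```python
import math

def loss(p):
    """Toy loss f(x, y) = 0.5 * (x^2 + 10 * y^2), steeper along y than x."""
    x, y = p
    return 0.5 * (x ** 2 + 10.0 * y ** 2)

def grad(p):
    """Analytic gradient of the toy loss."""
    x, y = p
    return [x, 10.0 * y]

def sgd(p, steps=100, lr=0.05):
    """Plain gradient descent: step against the gradient."""
    for _ in range(steps):
        g = grad(p)
        p = [pi - lr * gi for pi, gi in zip(p, g)]
    return p

def momentum(p, steps=100, lr=0.05, beta=0.9):
    """Momentum: step along a decaying running sum of past gradients."""
    v = [0.0] * len(p)
    for _ in range(steps):
        g = grad(p)
        v = [beta * vi + gi for vi, gi in zip(v, g)]
        p = [pi - lr * vi for pi, vi in zip(p, v)]
    return p

def adam(p, steps=100, lr=0.05, beta1=0.9, beta2=0.999, eps=1e-8):
    """Adam: momentum plus per-parameter scaling by gradient magnitude."""
    m = [0.0] * len(p)  # running mean of gradients
    v = [0.0] * len(p)  # running mean of squared gradients
    for t in range(1, steps + 1):
        g = grad(p)
        m = [beta1 * mi + (1 - beta1) * gi for mi, gi in zip(m, g)]
        v = [beta2 * vi + (1 - beta2) * gi * gi for vi, gi in zip(v, g)]
        # Bias correction compensates for the zero initialization of m and v.
        m_hat = [mi / (1 - beta1 ** t) for mi in m]
        v_hat = [vi / (1 - beta2 ** t) for vi in v]
        p = [pi - lr * mh / (math.sqrt(vh) + eps)
             for pi, mh, vh in zip(p, m_hat, v_hat)]
    return p
```

Starting each optimizer from `[1.0, 1.0]` and comparing `loss(...)` before and after gives a quick feel for how the learning rate changes the training dynamics. This toy setup says nothing about which optimizer is preferable on a real network.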

What's included

7 videos, 2 readings, 1 assignment, 1 programming assignment

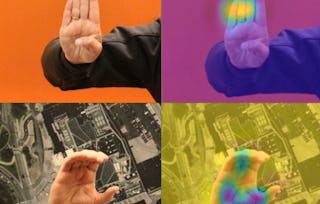

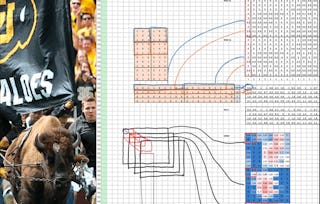

This module introduces you to convolutional neural networks (CNNs), the foundation of modern computer vision. Topics include how convolutional and pooling layers work, CNN architecture design, and practical techniques like data augmentation and transfer learning. The module covers classic architectures like VGG and ResNet and explains why CNNs outperform fully-connected networks on image data.
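As a rough illustration of what convolutional and pooling layers compute, the following sketch implements both on nested Python lists. The 5x5 image and the 2x2 edge-detecting kernel are made-up examples. Real CNN layers add learned weights, multiple channels, padding, and strides.

```python
def conv2d(image, kernel):
    """Valid cross-correlation, the operation deep learning libraries call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def max_pool2d(fmap, size=2):
    """Non-overlapping max pooling; rows and columns that do not fill a window are dropped."""
    return [
        [
            max(fmap[i + di][j + dj]
                for di in range(size) for dj in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]

# Hypothetical 5x5 image: dark on the left, bright on the right.
IMAGE = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hypothetical kernel that responds to dark-to-bright vertical edges.
KERNEL = [[-1, 1],
          [-1, 1]]

FEATURE_MAP = conv2d(IMAGE, KERNEL)  # 4x4 map, nonzero only at the edge
POOLED = max_pool2d(FEATURE_MAP)     # 2x2 summary of the feature map
```

The same four kernel weights are reused at every image position, so the parameter count does not grow with image size. That weight sharing is one reason convolutional layers suit image data better than fully-connected layers.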

What's included

7 videos, 2 readings, 1 assignment, 1 programming assignment

This module covers sequence modeling, starting with recurrent neural networks (RNNs) and long short-term memory networks (LSTMs), then progressing to the attention mechanism—the key innovation that led to transformers. Topics include how RNNs maintain hidden states across time steps, why the vanishing gradient problem motivated LSTMs, and how attention allows models to focus on relevant parts of their input.
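The idea of focusing on relevant parts of the input can be sketched with scaled dot-product attention, the variant used in transformers. The module may present other variants. The function names and the tiny query, key, and value vectors below are assumptions for illustration.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors.

    Returns (outputs, weights), where weights[i][j] is how strongly
    query i attends to input position j.
    """
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity between the query and each key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d)
                  for k in keys]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical inputs: the query points in the same direction as the first key.
KEYS = [[1.0, 0.0], [0.0, 1.0]]
VALUES = [[10.0, 0.0], [0.0, 10.0]]
QUERIES = [[5.0, 0.0]]

OUTPUTS, WEIGHTS = attention(QUERIES, KEYS, VALUES)
```

Because the query aligns with the first key, most of the attention weight falls on the first value, and the output is pulled toward it.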

What's included

7 videos, 1 reading, 1 assignment, 1 programming assignment

This final module covers the transformer architecture, which has revolutionized deep learning across domains. Topics include BERT (an encoder-only variant) and GPT (a decoder-only variant), Vision Transformers (ViT), which apply attention to images, and CLIP for multimodal learning that connects vision and language. The module emphasizes applying pre-trained models to real tasks.
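One practical difference between encoder-only and decoder-only transformers is which positions each token may attend to. BERT-style encoders attend in both directions, while GPT-style decoders use a causal mask that hides later positions. The helper below is a hypothetical sketch of that mask and is not code from any library. It also ignores padding masks.

```python
def attention_mask(seq_len, causal):
    """Return mask[i][j] = True when position i may attend to position j.

    causal=False gives the bidirectional pattern of encoder-only models;
    causal=True gives the left-to-right pattern of decoder-only models.
    """
    return [
        [(j <= i) if causal else True for j in range(seq_len)]
        for i in range(seq_len)
    ]

ENCODER_MASK = attention_mask(3, causal=False)  # every entry True
DECODER_MASK = attention_mask(3, causal=True)   # lower-triangular
```

In a full model, disallowed positions typically have their attention scores set to a very negative value before the softmax, so they receive near-zero weight.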

What's included

8 videos, 1 reading, 1 assignment, 1 programming assignment

Earn a career certificate

Add this credential to your LinkedIn profile, resume, or CV. Share it on social media and in your performance review.

Offered by

University of Colorado Boulder