Master the complete fine-tuning pipeline—from transformer internals to production deployment—using memory-efficient techniques that run on consumer hardware.

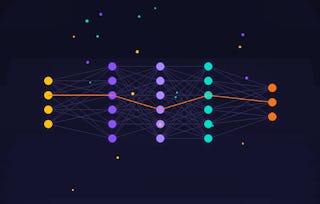

This course turns you from someone who uses large language models into someone who customizes them. You'll learn to fine-tune 7-billion-parameter models on a laptop GPU using QLoRA, which cuts memory requirements from 56GB to roughly 4GB by quantizing the frozen base model to 4 bits and training only small low-rank adapters on top of it.

What sets this course apart is its rigorous, scientific approach. You'll apply Popperian falsification throughout: instead of asking "does my model work?", you'll systematically try to break it. This skeptical mindset (testing tokenization edge cases, running rank ablation studies, and validating corpus quality through six falsification categories) builds the critical-thinking skills that separate production-ready engineers from those who ship fragile systems.

By the end of the course, you'll be able to:

- calculate VRAM requirements and select appropriate hardware;
- trace inference through the six-step transformer pipeline;
- configure LoRA rank to match task complexity;
- build high-quality training corpora using AST extraction; and
- publish datasets to Hugging Face with proper splits and documentation.

Because the course is built entirely on a sovereign AI stack, everything runs locally with no external dependencies: true ML independence.
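To make the memory and rank claims concrete, here is a back-of-the-envelope sketch in Python. It assumes exactly 7e9 parameters and decimal gigabytes, and it counts weights only (no activations, KV cache, optimizer state, or quantization-constant overhead), so it is an illustration of the arithmetic rather than the course's own VRAM calculator. It does not try to reproduce how the 56GB figure was derived; it only shows that 56GB corresponds to 8 bytes per parameter, while 4-bit weights land near 3.5GB. The second helper uses the standard LoRA parameter count: a rank-`r` update `B @ A` to a `d x k` matrix trains `r * (d + k)` values. The 4096 x 4096 projection size is a hypothetical, typical of 7B models.

```python
# Back-of-the-envelope memory arithmetic for a 7B-parameter model.
# Assumptions (not from the course): 7e9 parameters, decimal GB (1e9 bytes),
# weights only: no activations, KV cache, optimizer state, or the small
# per-block scale constants that 4-bit quantization also stores.

NUM_PARAMS = 7_000_000_000
BYTES_PER_GB = 1_000_000_000

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16/bf16": 2.0,
    "int8": 1.0,
    "4-bit (NF4)": 0.5,
}


def weight_memory_gb(num_params: int, bytes_per_param: float) -> float:
    """Memory for the weights alone, in decimal gigabytes."""
    return num_params * bytes_per_param / BYTES_PER_GB


def lora_trainable_params(d: int, k: int, r: int) -> int:
    """Trainable parameters LoRA adds to one d x k weight matrix at rank r.

    LoRA learns B (d x r) and A (r x k); their product has the shape of the
    frozen weight but only r * (d + k) trainable entries.
    """
    return r * (d + k)


if __name__ == "__main__":
    for name, bpp in BYTES_PER_PARAM.items():
        print(f"{name:>12}: {weight_memory_gb(NUM_PARAMS, bpp):5.1f} GB")

    # Bytes per parameter implied by a 56 GB footprint for 7B parameters.
    print(f"implied bytes/param for 56 GB: {56 * BYTES_PER_GB / NUM_PARAMS:.1f}")

    # Hypothetical 4096 x 4096 projection matrix.
    d = k = 4096
    full = d * k
    for r in (4, 8, 16, 64):
        lora = lora_trainable_params(d, k, r)
        print(f"rank {r:>2}: {lora:>9,} trainable vs {full:,} full ({lora / full:.2%})")
```

Because the adapter size grows linearly in `r`, doubling the rank doubles the trainable parameters per adapted matrix while the 4-bit base weights stay fixed, which is part of why rank ablations are cheap to run on modest hardware.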