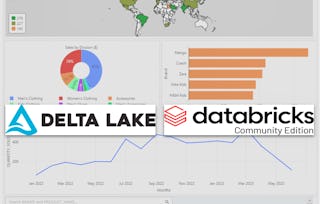

Building scalable, efficient data applications is a core requirement for data-driven organizations. This course introduces the Databricks Lakehouse, a unified platform for managing and analyzing large volumes of data, and teaches the essential skills for building modern data applications on it.

Building Modern Data Applications Using Databricks Lakehouse

Instructor: Packt - Course Instructors

What you'll learn

Deploy near-real-time data pipelines using Delta Live Tables

Orchestrate data pipelines with Databricks workflows

Implement data validation and monitor data quality

Details to know

10 assignments

December 2025

There are 10 modules in this course

In this section, we explore real-time data pipelines with Delta Live Tables (DLT), analyze Delta Lake architecture, and design scalable streaming solutions for lakehouse environments.

What's included

2 videos, 6 readings, 1 assignment

In this section, we cover ingesting data with DLT, applying changes, and configuring pipelines for scalability.

What's included

1 video, 6 readings, 1 assignment

In this section, we explore implementing data quality expectations in DLT pipelines, validating data integrity with temporary datasets, and quarantining poor-quality data for correction.

What's included

1 video, 4 readings, 1 assignment

In this section, we cover scaling DLT pipelines through cluster optimization, autoscaling, and Delta Lake techniques.

What's included

1 video, 4 readings, 1 assignment

In this section, we explore implementing data governance in a lakehouse using Unity Catalog, focusing on access controls, data discovery, and lineage tracking for compliance and security.

What's included

1 video, 7 readings, 1 assignment

In this section, we cover managing data storage locations in Unity Catalog with secure governance and access control.

What's included

1 video, 3 readings, 1 assignment

In this section, we explore data lineage in Unity Catalog, tracing origins, visualizing transformations, and identifying dependencies to ensure data integrity and proactive issue detection.

What's included

1 video, 3 readings, 1 assignment

In this section, we cover deploying and managing DLT pipelines using Terraform in Databricks.

What's included

1 video, 4 readings, 1 assignment

In this section, we explore Databricks Asset Bundles (DABs) for streamlining data pipeline deployment, emphasizing GitHub integration, version control, and cross-team collaboration.

What's included

1 video, 4 readings, 1 assignment

In this section, we explore monitoring data pipelines in Databricks, focusing on pipeline health, performance, and data quality. Techniques include Databricks SQL (DBSQL) alerts and webhook triggers for real-time issue resolution.

What's included

1 video, 4 readings, 1 assignment
